An introduction to neural radiance fields and their applications

What was done before NeRF?

Before NeRF, deep learning was applied mostly to 2D data such as images, which is what we call Euclidean data. Common applications of deep learning on 2D data include classification, regression, and segmentation.

Nowadays, however, deep learning research has extended to 3D data, which is non-Euclidean in nature. The goal here is to create a 3D model from Euclidean (2D image) data by training a network for a given number of epochs. This brings us to NeRF!

What is NeRF?

NeRF (Neural Radiance Field) is a neural network that is trained to generate novel views, or a full 3D representation, of an object or scene from a limited set of 2D images. Given input images of a scene, it effectively interpolates between them to render the complete scene from new viewpoints.

A look at the math behind it and how it works:

NeRF is a neural network that takes a 5D coordinate, made up of a spatial location (x, y, z) and a viewing direction (θ, φ), and outputs a 4D value: an RGB color and a volume density, which acts as opacity. Classic volume rendering techniques then accumulate these colors and densities along camera rays to produce the pixels of an image.
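For reference, the mapping and the rendering step can be written out as in the original NeRF paper. The symbols F_Θ, σ, δ_i, T_i and N are introduced here and do not appear in the text above; note also that the paper feeds the viewing direction to the network as a 3D unit vector rather than as the two angles (θ, φ).

$$F_\Theta : (x, y, z, \theta, \phi) \mapsto (r, g, b, \sigma)$$

$$\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \left(1 - e^{-\sigma_i \delta_i}\right) \mathbf{c}_i, \qquad T_i = \exp\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right)$$

Here σ_i and c_i are the density and color predicted at the i-th of N samples along camera ray r, δ_i = t_{i+1} - t_i is the distance between adjacent samples, and T_i is the transmittance, the fraction of light from sample i that reaches the camera without being absorbed. The factor 1 - e^{-σ_i δ_i} is the opacity of sample i.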

Here I will explain this using code written with PyTorch3D.

The following steps create and train a simple neural radiance field:

- Initialize the implicit renderer

- Define the neural radiance field model

- Fit the radiance field

Initialize the implicit renderer:

An implicit function f can be defined as:

$$f: \mathbb{R}^3 \to \mathbb{R}$$

That is, it maps each 3D point to a scalar value.

An implicit surface S can then be defined as:

$$S = \{\, x \in \mathbb{R}^3 \mid f(x) = \tau \,\}$$

That is, S is the set of all 3D points x for which f(x) = τ.
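To make these definitions concrete, here is a minimal sketch that assumes a hypothetical f: the signed distance to a unit sphere, f(x) = ||x|| - 1, chosen only for illustration. The tolerance eps is also an assumption, since an exact floating-point test f(x) == τ rarely succeeds.

```python
import math


def f(point):
    """Hypothetical implicit function: signed distance to a unit sphere at the origin."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z) - 1.0


def on_surface(point, tau=0.0, eps=1e-6):
    """True if point lies on the level set S = {x : f(x) = tau}, within tolerance eps."""
    return abs(f(point) - tau) < eps


print(on_surface((1.0, 0.0, 0.0)))            # True: on the unit sphere
print(on_surface((0.0, 0.0, 0.0)))            # False: f = -1 at the centre
print(on_surface((0.0, 0.0, 0.5), tau=-0.5))  # True: the level set tau = -0.5 is a sphere of radius 0.5
```

Changing τ selects a different surface from the same function, which is why the level τ is part of the definition of S.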

For each point x we evaluate f(x) to determine whether it lies on the surface. We then use a coloring function

$$c: \mathbb{R}^3 \to \mathbb{R}^3$$

to assign an RGB color to individual ray points. Finally, the raymarching step collapses all the f(x) and c(x) values along a ray emitted by the camera into a single pixel color for that ray.
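The surface-rendering idea above can be sketched with sphere tracing, one common way to raymarch an implicit surface when f is a signed distance function (so τ = 0). This technique is chosen here for illustration and is not necessarily what the original code does; sdf_sphere, color, and every parameter value are hypothetical.

```python
import math


def sdf_sphere(p, radius=1.0):
    """Hypothetical f(x): signed distance from p to a sphere centred at the origin."""
    return math.sqrt(sum(c * c for c in p)) - radius


def color(p):
    """Hypothetical c(x): map coordinates in [-1, 1] to RGB values in [0, 1]."""
    return tuple(0.5 * (c + 1.0) for c in p)


def sphere_trace(origin, direction, f, max_steps=100, eps=1e-6, t_max=10.0):
    """March along origin + t * direction, stepping by f(x) each time.

    Assumes f is a signed distance function, direction has unit length, and
    the ray starts outside the surface. Returns the hit point or None.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = f(p)
        if dist < eps:      # converged onto the surface f(x) = 0
            return p
        t += dist           # safe step: no surface is closer than dist
        if t > t_max:       # the ray has left the scene without a hit
            break
    return None


def render_pixel(origin, direction, background=(0.0, 0.0, 0.0)):
    """Collapse one camera ray into a single pixel color."""
    hit = sphere_trace(origin, direction, sdf_sphere)
    return color(hit) if hit is not None else background


print(render_pixel((0.0, 0.0, -3.0), (0.0, 0.0, 1.0)))  # (0.5, 0.5, 0.0): hits (0, 0, -1)
print(render_pixel((0.0, 2.0, -3.0), (0.0, 0.0, 1.0)))  # (0.0, 0.0, 0.0): misses the sphere
```

Stepping by f(x) is what lets the march converge quickly: far from the surface it takes large steps, and near the surface the steps shrink toward zero.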

In PyTorch3D, the renderer therefore consists of a raysampler and a raymarcher. The raysampler emits rays from the pixels of the image and samples points along them; common raysamplers in PyTorch3D include NDCGridRaysampler() and MonteCarloRaysampler(). The raymarcher then takes the densities and colors sampled along each ray and converts each ray into the color and opacity of its output pixel. An example of a raymarcher is EmissionAbsorptionRaymarcher().
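The raysampler/raymarcher split described above can be sketched for a single ray in plain Python. This is an illustrative sketch, not PyTorch3D's implementation (which works on batched tensors), and the function names are hypothetical. Densities are treated as per-point opacities in [0, 1], ordered from near to far, in the spirit of an emission-absorption raymarcher.

```python
def sample_depths(near, far, n_points):
    """Raysampler step: n_points evenly spaced depths between near and far."""
    step = (far - near) / (n_points - 1)
    return [near + i * step for i in range(n_points)]


def ray_points(origin, direction, depths):
    """3D points origin + t * direction for each sampled depth t."""
    return [tuple(o + t * d for o, d in zip(origin, direction)) for t in depths]


def emission_absorption(densities, colors):
    """Raymarcher step: collapse per-point densities in [0, 1] and RGB colors,
    ordered from near to far, into (pixel_rgb, pixel_opacity)."""
    transmittance = 1.0                 # light not yet absorbed in front of this point
    rgb = [0.0, 0.0, 0.0]
    for alpha, c in zip(densities, colors):
        weight = alpha * transmittance  # how much this point contributes
        rgb = [acc + weight * ch for acc, ch in zip(rgb, c)]
        transmittance *= 1.0 - alpha    # light absorbed by this point
    return tuple(rgb), 1.0 - transmittance


depths = sample_depths(1.0, 3.0, 5)
print(depths)  # [1.0, 1.5, 2.0, 2.5, 3.0]
print(ray_points((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), depths[:2]))  # [(0.0, 0.0, 1.0), (0.0, 0.0, 1.5)]
print(emission_absorption([0.5, 0.5], [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]))  # ((0.5, 0.0, 0.25), 0.75)
```

Because each weight is a point's opacity times the transmittance in front of it, a fully opaque point hides everything behind it, and the pixel opacity equals the sum of the weights.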

Define the neural radiance field model:

The Neural Radiance Field module defines a continuous field of colors and opacities over the 3D domain of the scene.

(The above is simply the neural radiance field module used in the example below.)

We also need to define a function that performs the NeRF forward pass. It receives as input a set of tensors that parameterize a bundle of rays to render. This ray bundle is first converted into a set of 3D points along the rays, in the world coordinates of the scene. Each point is then mapped to a harmonic embedding, which is passed through the network's color and opacity branches. These label each point with a 3D color vector and a 1D opacity scalar in [0, 1], respectively, giving our 4D result.
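The forward pass described above can be sketched in plain Python. This is a minimal sketch under stated assumptions: ToyRadianceField, forward, and the single-layer branches are hypothetical stand-ins for the real MLP (which is deeper and trained with PyTorch), and the viewing direction that NeRF's color branch also receives is omitted. Powers-of-two frequencies are the usual choice for the harmonic embedding; exact scaling varies between implementations.

```python
import math
import random


def harmonic_embedding(point, n_freqs=4):
    """Map each coordinate v to sin(2^k v) and cos(2^k v) for k = 0 .. n_freqs - 1."""
    scaled = [v * (2.0 ** k) for v in point for k in range(n_freqs)]
    return [math.sin(s) for s in scaled] + [math.cos(s) for s in scaled]


class ToyRadianceField:
    """Hypothetical stand-in for the NeRF network: one random, untrained
    linear layer per branch. The viewing direction is ignored for brevity."""

    def __init__(self, n_freqs=4, seed=0):
        rng = random.Random(seed)
        dim = 3 * 2 * n_freqs  # 3 coordinates x (sin, cos) x n_freqs
        self.n_freqs = n_freqs
        self.w_color = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(3)]
        self.w_density = [rng.gauss(0.0, 0.1) for _ in range(dim)]

    def __call__(self, point):
        emb = harmonic_embedding(point, self.n_freqs)
        # Color branch: three logits squashed into (0, 1) by a sigmoid.
        rgb = tuple(1.0 / (1.0 + math.exp(-sum(w * e for w, e in zip(row, emb))))
                    for row in self.w_color)
        # Opacity branch: ReLU gives a non-negative density; 1 - exp(-density) maps it to [0, 1).
        density = max(0.0, sum(w * e for w, e in zip(self.w_density, emb)))
        return rgb, 1.0 - math.exp(-density)


def forward(field, origin, direction, depths):
    """Ray -> 3D points in world coordinates -> (rgb, opacity) per point."""
    points = [tuple(o + t * d for o, d in zip(origin, direction)) for t in depths]
    return [field(p) for p in points]


field = ToyRadianceField()
for rgb, opacity in forward(field, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), [0.5, 1.0, 1.5]):
    print([round(c, 3) for c in rgb], round(opacity, 3))
```

Until the weights are trained, the printed colors and opacities are arbitrary; the point of the sketch is the data flow from rays to points to embeddings to the two branches.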

We then need to run an optimization loop that fits the radiance field to our observations.

Fit the radiance field:

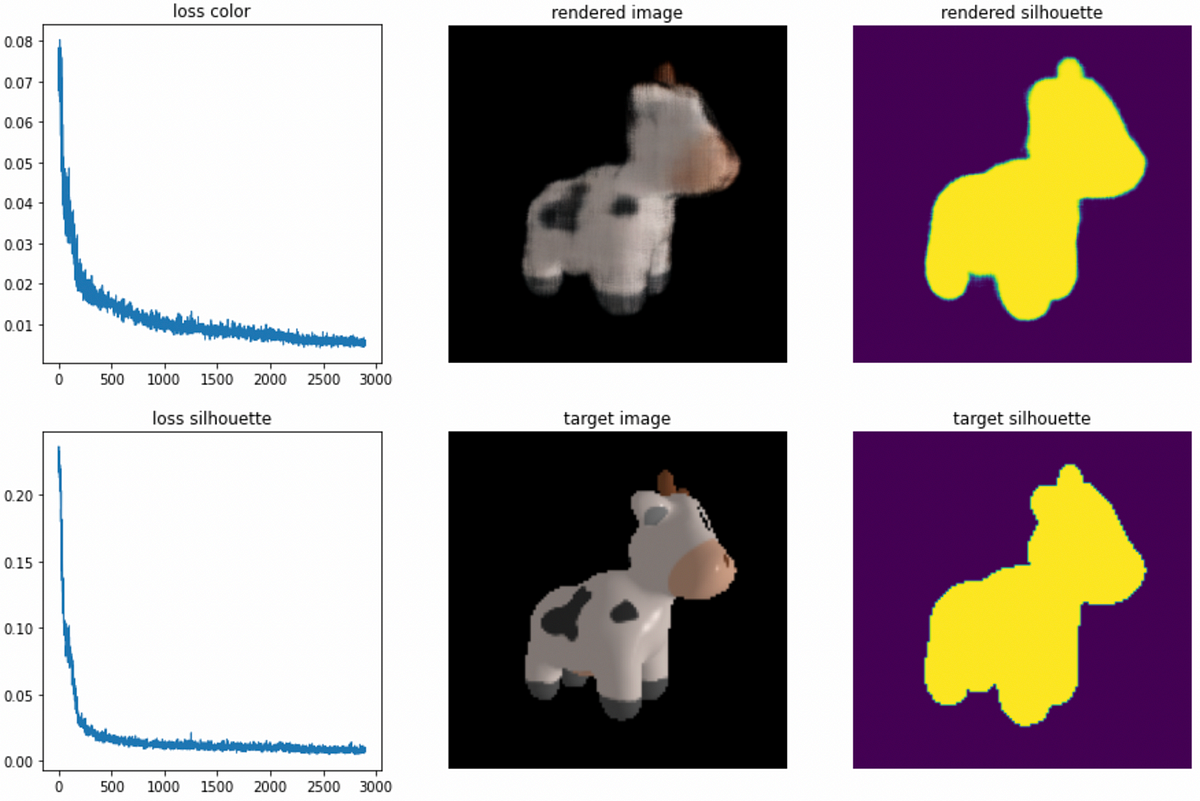

We then fit the radiance field by rendering it from the cameras' viewpoints and comparing the renders with the observed target images and target silhouettes, as indicated in the code.
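The fitting step described above (render, compare with the target image and silhouette, update) can be illustrated at toy scale: one ray, four samples, grayscale color, and a finite-difference gradient in place of autograd. Everything here is a hypothetical sketch, including the parameter layout, the squared-error loss, and the learning rate; a real implementation would optimize network weights over many rays with PyTorch autograd and an optimizer such as Adam.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def render(params):
    """Emission-absorption render of one ray with grayscale colors.

    params holds n opacity logits followed by n color logits (hypothetical layout).
    Returns (pixel_color, pixel_opacity)."""
    n = len(params) // 2
    transmittance, color = 1.0, 0.0
    for i in range(n):
        alpha, c = sigmoid(params[i]), sigmoid(params[n + i])
        color += alpha * transmittance * c
        transmittance *= 1.0 - alpha
    return color, 1.0 - transmittance


def loss_fn(params, target_color, target_silhouette):
    """Squared error on the color plus squared error on the silhouette (opacity)."""
    color, opacity = render(params)
    return (color - target_color) ** 2 + (opacity - target_silhouette) ** 2


def numerical_grad(objective, params, h=1e-5):
    """Central finite differences, standing in for autograd."""
    grad = []
    for k in range(len(params)):
        plus, minus = list(params), list(params)
        plus[k] += h
        minus[k] -= h
        grad.append((objective(plus) - objective(minus)) / (2.0 * h))
    return grad


def fit(target_color, target_silhouette, n_points=4, steps=500, lr=1.0):
    """Plain gradient descent: render, compare with the targets, update."""
    def objective(p):
        return loss_fn(p, target_color, target_silhouette)

    params = [0.0] * (2 * n_points)
    history = []
    for _ in range(steps):
        history.append(objective(params))
        grad = numerical_grad(objective, params)
        params = [p - lr * g for p, g in zip(params, grad)]
    return params, history


params, history = fit(target_color=0.8, target_silhouette=1.0)
print(f"loss: {history[0]:.4f} -> {history[-1]:.6f}")
```

The silhouette term pushes the ray's total opacity toward the target mask, while the color term fits what the ray shows; the same two-part comparison scales up to whole images.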

Training runs for 3000 iterations by default, and the final output looks something like this:

Some disadvantages and newer techniques

The original model was slow to train and could only handle static scenes. It was also inflexible, in the sense that a model trained on one scene cannot be reused for another. However, various methods have since improved on the original NeRF concept, such as NSVF, Plenoxels, and KiloNeRF.

Thank you for making it this far! Please feel free to follow me on LinkedIn, GitHub, and Medium, and stay tuned for future content!

https://becominghuman.ai/its-nerf-or-nothin-ad9e61c66290